From Smart Cars to Smart Care

Doctors working at the Michigan Center for Integrative Research in Critical Care are developing machine learning systems to aid physicians in the care of their patients.

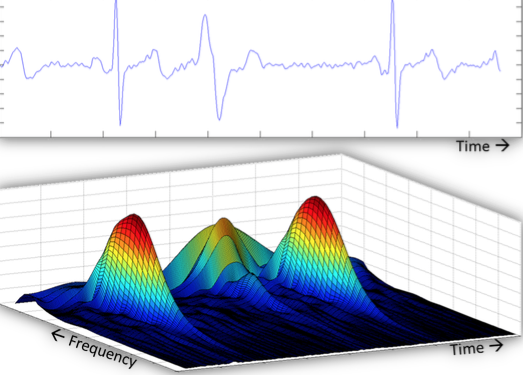

Figure 1A consists of images of three different screens. They show real-time processing of physiological waveforms to recognize patterns and provide input to machine learning systems. At U-M, the computer systems can continuously record and store data from any given patient.

Artificial intelligence (AI), per the Oxford dictionary, is defined as “the theory and development of computer systems able to perform tasks normally requiring human intelligence, such as visual perception, speech recognition, decision-making, and translation between languages.” You may not realize it, but AI is a major part of everyday life for most people: online shopping, mobile apps, and home devices are all often powered by AI.

Many everyday tools use AI and machine learning systems, whether or not you realize it: virtual personal assistants (Siri, Google Now, Cortana); video games; smart cars; purchase prediction (Amazon); fraud detection; customer support; news generation; security surveillance; music/movie recommendations; smart home devices (thermostat, oven); ridesharing apps (Uber, Lyft); autopilot on airplanes; email spam filters; plagiarism checkers; mobile check deposits; Snapchat filters; facial recognition programs; voice-to-text programs.

Researchers are actively looking for ways to apply AI and machine learning to medicine. Ashwin Belle, Ph.D., Research Investigator of Emergency Medicine at the University of Michigan (U-M), uses AI and machine learning systems to help doctors at U-M and C.S. Mott Children’s Hospital (Mott) improve the quality of care they deliver to their patients.

In medicine, people who build AI systems do not just use the data as is; they create systems that process the data to extract important features from it, “features [that] are often hidden amongst large amounts of lost data that is collected from patients,” Belle said. The systems can then match the current patient to previous patients with similar symptoms, diseases, and treatments. From there, doctors have an idea of what might happen to their patient next based on what has or has not worked in the past.
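The matching step can be pictured with a small Python sketch. This is only an illustration under stated assumptions, not the method Belle's team uses: it assumes each patient has already been reduced to a short list of numeric features, the feature values are made up, and the `find_similar_patients` helper is hypothetical. It simply ranks past patients by straight-line (Euclidean) distance from the current one.

```python
import math

# Hypothetical, made-up feature vectors: (heart rate, systolic BP, respiratory rate).
PAST_PATIENTS = {
    "patient_a": (72.0, 120.0, 14.0),
    "patient_b": (118.0, 85.0, 26.0),
    "patient_c": (95.0, 100.0, 20.0),
}

def euclidean_distance(a, b):
    """Straight-line distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def find_similar_patients(current, past, k=2):
    """Return the names of the k past patients closest to the current one."""
    ranked = sorted(past, key=lambda name: euclidean_distance(current, past[name]))
    return ranked[:k]

current_patient = (115.0, 88.0, 25.0)
matches = find_similar_patients(current_patient, PAST_PATIENTS)
print(matches)
```

A real system would likely rescale the features first so that no single measurement dominates the distance, and would draw on far more than three past patients.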

“Artificial intelligence is an umbrella term, but that term also carries, unfortunately, negative connotation around it as well,” Belle said. “That’s perhaps because artificial intelligence seems like we’re replacing humans in many ways.” For example, millions of factory workers have lost their jobs to machines, which are faster and cheaper than human workers. The broad term AI doesn’t quite capture what Belle is doing at U-M; his work is better understood as machine learning.

MACHINE LEARNING

“At the moment, if you use the word machine learning, it’s all about teaching the computer to learn,” Belle said. Machine learning is a type of AI in which a machine learns from data rather than being explicitly programmed for each task.

“You have different buckets of data,” Belle said. “Each bucket looks different. We’re trying to throw all of this, or maybe a subset of this, into the machine learning aspect, and asking machines to learn from it. In one way or another, it learns from it.” After it gets exposed to a large amount of data, any new data that gets added is automatically processed because the computer has learned how to do this previously. It just changes and adapts to fit this new information in with its understanding of the old information.

“Machine learning can help physicians, clinicians, and all kinds of caregivers provide the best treatment possible in the quickest manner no matter where they are,” Belle said. Even out on the battlefield, where resources are limited and people may be in disarray, physicians have to try to help everyone. For each person, they have to quickly assess the injury, figure out what resources are available, and try to save the patient’s life.

“Medicine is all of our understanding of how the body works, and there’s no end to the amount there is to understand,” Belle said. “Machine learning can get us there, at least partly there, in helping us understand how to decipher what the human body does and how it malfunctions.” With the help of computers equipped with machine learning, it should become much easier to build a fuller understanding of the human body.

“In all honesty, I think there are some surprises it brings us,” Belle said. Sometimes machine learning computers will point out a particular element or factor that the doctors may never have noticed before. “The computer points out that it’s likely this piece of information will be useful in the care of a patient.”

A BABY BRAIN

Artificial intelligence has been in practical use for the last 30 or 40 years. It was used to predict stocks and even fly airplanes using autopilot long before it was ever used in medicine. Though AI and machine learning hold great promise to revolutionize the way medicine is practiced, applications are still in the early stages of development.

“Medicine has been reluctant to adopt artificial intelligence so far, partly because it’s lives at risk,” Belle said. “How could you justify a computer making a mistake to the family of an extremely ill patient?” A main reason that AI is so new in medicine is that it’s hard to measure the quality of AI programs.

“If you think of a new medication coming to market, it takes about a decade sometimes because they do all kinds of clinical trials to prove that this medicine truly helps a majority of the people, while some might suffer from side effects that will be there no matter what,” Belle said. Trials are done to prove to organizations like the Food and Drug Administration (FDA) that new medications and devices are safe for the majority of people. Over the last decade, the FDA has started to understand that the use of AI is crucial to the development of medicine and care for patients, and they’ve started to develop ways to prove the same for AI.

“If you look at the history of medicine, even just modern medicine so to speak, and the history of where our artificial intelligence has been so far, it’s still in its baby phases,” Belle said. The end goal of AI in medicine is to be like the brain. In this regard, AI is still in its nascent stages. It has a long way to go before it can actually become something that strongly resembles the brain.

“This may be centuries away,” Belle said. “Perhaps we may never attain it because the brain is so complex. But we are definitely taking baby steps at the moment, and it’s a field that is growing and has potential to continue growing for the next few decades.”

The main difference at the moment between AI and the brain is simplistic versus fuzzy logic.

SIMPLISTIC VERSUS FUZZY

Fuzzy logic is the ability to take several factors and form an answer or solution to a problem based on all of these factors put together. In contrast, simplistic logic has only two options: yes or no, more or less, black or white.
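The contrast can be sketched in a few lines of Python. This is a toy illustration, not anything from MCIRCC: the cutoffs (38.0 °C, 37.0 to 39.0 °C, 80 to 120 beats per minute) are assumptions chosen for the example and are not clinical guidance, and the function names are hypothetical. The two-option rule returns only yes or no, while the fuzzy version gives each factor a degree between 0 and 1 and then combines them.

```python
def crisp_fever(temp_c):
    """Two-option logic: the answer is either True or False."""
    return temp_c >= 38.0  # assumed cutoff, for illustration only

def fuzzy_membership(value, low, high):
    """Degree in [0, 1]: 0 at or below `low`, 1 at or above `high`, linear in between."""
    if value <= low:
        return 0.0
    if value >= high:
        return 1.0
    return (value - low) / (high - low)

def fuzzy_concern(temp_c, heart_rate):
    """Combine two graded factors into one overall degree of concern."""
    fever = fuzzy_membership(temp_c, 37.0, 39.0)            # assumed range
    fast_pulse = fuzzy_membership(heart_rate, 80.0, 120.0)  # assumed range
    # A common choice for a fuzzy "AND" is the minimum of the two degrees.
    return min(fever, fast_pulse)

print(crisp_fever(37.9))           # the crisp rule says "no fever"
print(fuzzy_concern(37.9, 110.0))  # the fuzzy rule reports partial concern, about 0.45
```

A temperature just under the cutoff gets a flat "no" from the crisp rule, whereas the graded version still registers it and weighs it alongside the pulse.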

For example, imagine a person riding in a self-driving car. In the middle of the road is a pole. The car could swerve around the pole, but an old woman is crossing the street from one side, and a dog is running into the street from the other. The car has four choices: it can save the driver by slamming on the brakes; swerve to the left, hitting the old woman; swerve to the right, hitting the dog; or keep going and smash into the pole. This is not an easy decision to make for a human, let alone a car.

“At the most fundamental level, machine learning is still trying to decipher, for lack of a better phrase, between black and white: simplistic differences between objects, simplistic differences between scenarios, and simplistic differences between disease cases or normalcy versus not normalcy, in medicine,” Belle said. “But if you think of the human brain, which is the true intelligence, it’s not that simplistic. We are a lot more complex; we can evaluate several different elements at the same time to come up with one decision or one opinion or perhaps multiple opinions on the same subjects, so there’s a fuzzy logic to the way the brain works.” In medicine, fuzzy logic must be used to completely assess the problem at hand and come up with the proper solution. If developers can give machine learning more fuzzy logic, as opposed to the simplistic logic it currently has, computers will be much closer to resembling a human brain in the way they solve problems.

“That’s where fuzzy logic perhaps will help,” Belle said. “You need to build more complex thinking into these machines so they can make the right decision, perhaps closest to what we would have done in the same scenario. But this decision will be better because we make those decisions under stress and pressure, whereas computers don’t have those added factors.” In the scenario proposed earlier, simplistic knowledge is not sufficient; you’d need fuzzier logic to make the best decision. While applying fuzzy logic to machine learning remains the goal, applications with simplistic logic can still advance medicine, at least in this day and age.

Imagine another example, this time in a medical scenario: an intern is trying to perform a task, such as starting a central line. A senior resident or attending physician may correct their technique and tell them to do it a different way. The intern will usually trust their superior and do it the way they are told.

“That’s because of the gestalt that their superior has accumulated over years of experience and having seen numerous similar cases,” Belle said. “Just having ‘been there and done that’ can lead to a fuzzy logic decision. In many cases, you don’t need to question that because you know that person has done these things before, so you go with it.”

An intern can completely trust a senior resident or an attending physician because they’ve done this many times and have built a good foundation of fuzzy logic. The same would not be true if a computer were to tell a senior resident or attending physician what to do. The resident or attending physician may question what the computer is recommending because our society doesn’t yet have complete trust in computer software.

BARRIERS TO SUCCESS

There are many barriers to successfully applying AI in medicine, but three main obstacles predominate: 1) proving that machine learning systems work well, 2) getting physicians to trust and adopt machine learning systems, and 3) getting enough data to train the computer and prepare it for any form of any disease.

As previously mentioned, there is the issue of proving that these machine learning systems are working well. To prove this, the systems learn from historical data and are then tested on new patients to see whether what they have learned holds up. “That makes sense logically,” Belle said. “But in the field of medicine, each patient is different. You can learn from a lot of different patients, but then when you apply it to a single patient, the outcome might be slightly different than what you thought it would be because there are certain nuances, certain complications with that patient that are different from what it had learned previously.”
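The learn-then-test procedure described above can be sketched in Python. Everything here is an assumption made for illustration: the records are made up, `lab_value` stands in for any single measurement, and the "learning" is just a cutoff placed midway between two group averages, which is far simpler than a real machine learning system.

```python
# Made-up records: (lab_value, had_complication). Not real patient data.
HISTORICAL = [(1.0, False), (1.4, False), (1.8, False),
              (3.6, True), (4.2, True), (5.0, True)]
NEW_PATIENTS = [(1.2, False), (2.4, True), (4.8, True), (2.0, False)]

def learn_threshold(records):
    """'Train' by placing a cutoff midway between the two group averages."""
    with_complication = [x for x, label in records if label]
    without = [x for x, label in records if not label]
    mean_with = sum(with_complication) / len(with_complication)
    mean_without = sum(without) / len(without)
    return (mean_with + mean_without) / 2

def accuracy(threshold, records):
    """Fraction of records where 'value >= threshold' matches the true label."""
    correct = sum((x >= threshold) == label for x, label in records)
    return correct / len(records)

threshold = learn_threshold(HISTORICAL)
print("accuracy on historical data:", accuracy(threshold, HISTORICAL))
print("accuracy on new patients:", accuracy(threshold, NEW_PATIENTS))
```

In this toy data the learned cutoff fits every historical record but misses one of the four new patients, which mirrors Belle's point that a rule learned from past patients may not hold for the next one.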

The second main obstacle is creating physician trust in these computer programs.

“Convincing physicians that even though it’s a black box in nature, it’s doing well. And them trying to decipher the black box is one big issue,” Belle said. In this context, a black box means any system whose inner workings are hidden from the user.

It is often in a physician’s nature to want to fully understand everything they are using. This is usually very good, and can lead to being a better doctor. But sometimes they must work with things that are very different from what they usually do, and understanding what is happening inside this black box would require years of additional training. Even experts like Belle cannot fully understand everything a machine learning system is doing.

This trust concern leads to adoption issues; physicians who are not convinced, or who are skeptical in some ways, or want to learn more about it, may not adopt it right away. “Even though it’s working—it’s working well—they might not start using it,” Belle said.

It’s harder to prove that a system is functioning correctly if it doesn’t work the exact same way for every single patient. The reason for the difference may not be the patient; it may be that the patient’s form of the disease is slightly different from previous ones, or that the machine learning system just doesn’t have enough data to work the same every time for every patient.

THE MISSING PIECE

“Machine learning as of today needs to learn from huge amounts of data in order to be accurate, and that data does not exist,” Belle explained. “Right now, we have started collecting it, but I think a few more years down the line we’ll have perhaps enough data where we can confidently claim that machine learning is working to its best.” In the state of Michigan alone there are several hundred community hospitals, which together see far more patients than U-M does. But much of that data is lost because community hospitals can’t collect and store all of the data they receive from their large numbers of patients.

“If you think about medicine, it’s in the last decade that we really started thinking about data as a commodity, as something that we need to store, to value, to cherish, to learn from,” Belle said. “Unfortunately, even if they’d thought of this earlier, there were no capabilities of doing that.” If there were a way to collect data from every patient who went to a hospital for anything in the United States, these systems could learn much more quickly.

Still, it won’t be easy. For rare diseases especially, where only a small percentage of the population will ever come to a hospital with the condition, getting enough data to teach computers how to predict what will happen next for someone with that disease will take a very long time. “In order to decipher and unearth patterns that we’ve never seen before within that data, we need to wait for a great enough number of these cases to accumulate, get the quality data to accumulate for [a specific disease],” Belle said.

COLLABORATING WITH PHYSICIANS

The negative connotation related to replacing jobs carries over to replacing doctors as well. While it is commonly believed that AI and machine learning programs are starting to take over the work of doctors in the hospital, this is false. The point of AI, when used in a hospital setting, is to aid physicians with their decision-making process. Often, the decisions made in hospitals are critical and time-sensitive, and many factors need to be considered when making those decisions.

The hope of the doctors working at the Michigan Center for Integrative Research in Critical Care (MCIRCC) is that their machine learning devices can quickly grab and translate the necessary information to make it easier for the doctors to make their decisions.

“I think being part of the University of Michigan, which is a phenomenal school for both medicine and engineering, I get to really be in the medley of two fields, and I get to interact with both sides very equally,” Belle said. Especially at MCIRCC, physicians are an integral part of the design and development of their products. Ultimately, these products will be used by physicians, so Belle and his colleagues want to make sure that they do not end up developing solutions that will not help.

From the get-go, physicians work hand-in-hand with the software developers at MCIRCC to fully understand the problem, find a solution, and design and develop that solution. Along the way, they constantly check the developers’ work and correct it when something is wrong. The physicians also act as beta testers and help design the final user interface to make it feasible in a clinical environment. “So yeah, every step of the way they’re there holding our hands,” Belle said.

THE FINAL FRONTIER

The classic TV show Star Trek illustrated a concept of what medicine would be like in the future: the tricorder. It looks like a small, portable radio that gets held up in front of a patient. Its detachable “sensor probe” scans patients to collect bodily information and diagnose diseases.

Though the future of AI may not be a fancy tool like the tricorder, “it still is something that we need to strive for,” Belle said. “If we can quickly diagnose problems, quickly figure out what the best solution is so that the caregivers can do their appropriate thing in a timely manner, I think that’s amazing.”

Belle considers the “final frontier” of medicine to be never falling sick, never getting injured, and even never aging. So while AI and machine learning may not be the “final frontier” of medicine, Belle believes that something like a tricorder is possible, which could greatly increase the quality of care physicians provide for their patients.

Her life outside of the journalism lab consists of one thing: gymnastics. It takes up all of Abbie’s “free time,” but she wouldn’t be who she is today without having spent almost 13 years in the sport. Abbie also loves listening to music — believing that there is at least one song for whatever mood someone is in — and spends much of her money on going to concerts, her happy place. She simultaneously wants May (aka graduation) to come quickly, but also take its time; clearly, she’s terrible at making decisions, so it’s a good thing she has three other editors to help her through this crazy process!